Hello, I'm

Didier Merk

AI/ML Researcher

A graduate in Artificial Intelligence from the University of Amsterdam, most recently working on time-series forecasting using Generative Pre-Trained Transformers.

I recently graduated from the Artificial Intelligence master's programme at the University of Amsterdam. During the last year of my studies I worked at ING Bank, under the supervision of Dr. Fabian Jansen and Yongtuo Liu, where I researched the application of Large Language Model architectures to financial time-series forecasting.

Before my MSc, I completed a major in AI as part of the Natural and Social Sciences Bachelor's programme, also at the University of Amsterdam. For my bachelor's thesis I interned at CERN, the international research organisation, where I wrote about Hyperparameter Optimization for Jet Tagging.

I am enthusiastic and motivated, and have a strong mathematical background. In my free time I enjoy sports such as football, climbing and skiing, spending time with friends and travelling.

Two-year master's programme focused on the mathematical and theoretical groundwork of AI models. Graduated with an 8.2 GPA; my thesis was titled "Rethinking Models and Evaluations for Time Series Forecasting".

Core courses included Advanced Machine Learning, Deep Learning, Natural Language Processing and Computer Vision. Specialized in time-series forecasting using foundation models.

Specialized major in Artificial Intelligence as part of the Natural and Social Sciences Bachelor's programme. Focused on fundamental AI principles, algorithms, and applications.

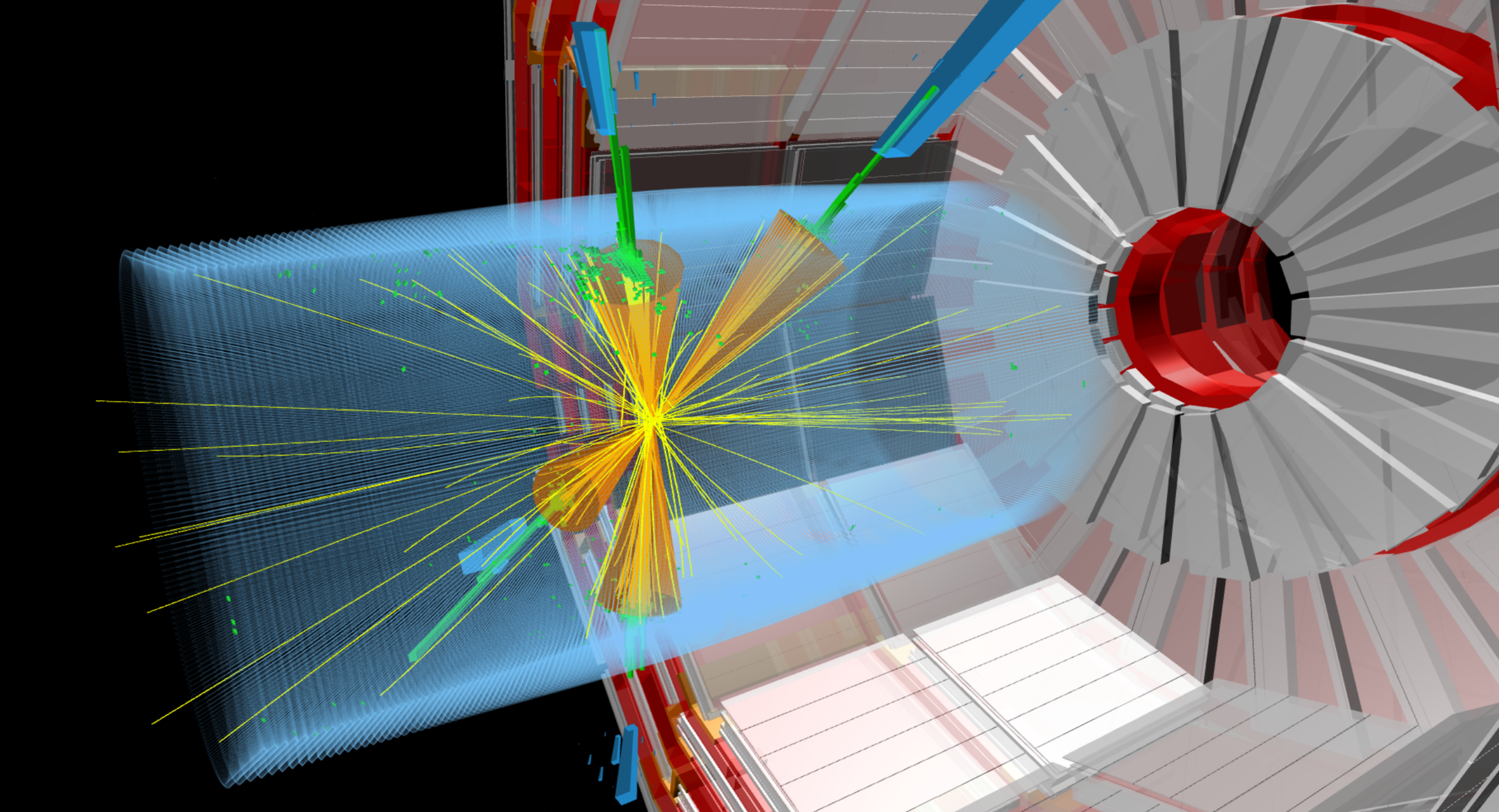

Completed coursework in Machine Learning, Neural Networks, Logic, and Data Structures & Algorithms. Final project involved developing a hyperparameter optimization system for particle physics at CERN.

Interdisciplinary programme combining natural sciences (physics, mathematics, biology) with social sciences (psychology, economics and urban planning). This broad foundation provided diverse perspectives and analytical approaches.

The programme's interdisciplinary nature helped develop critical thinking skills and the ability to approach complex problems from different angles, which proved valuable for later AI studies.

Enhanced existing visual grounding techniques by simultaneously generating images and precise segmentation masks directly from natural language prompts.

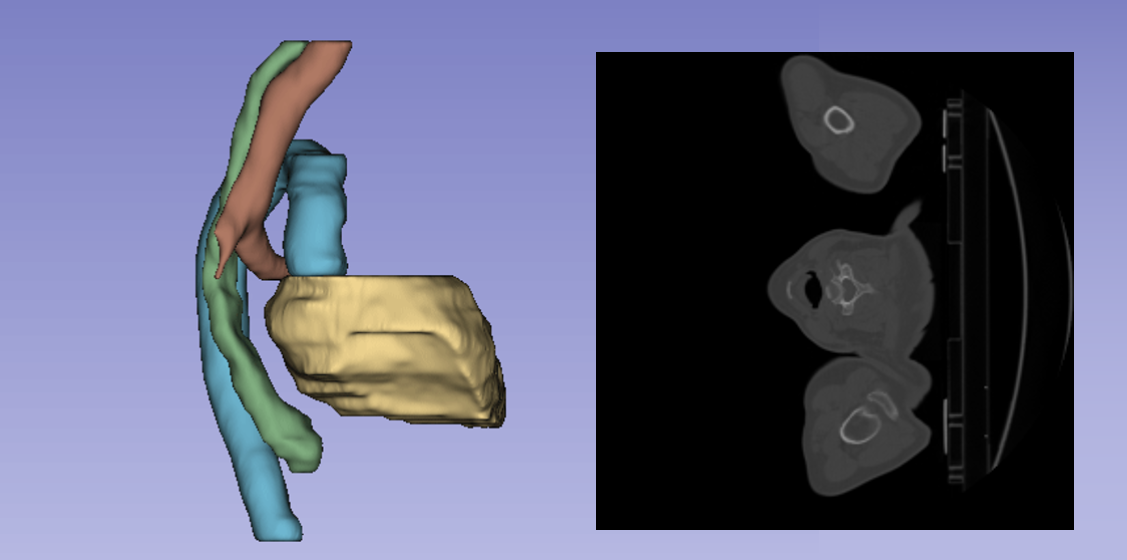

Produced state-of-the-art segmentation accuracy in CT scans of thoracic organs through optimized pre- and postprocessing and model tuning.

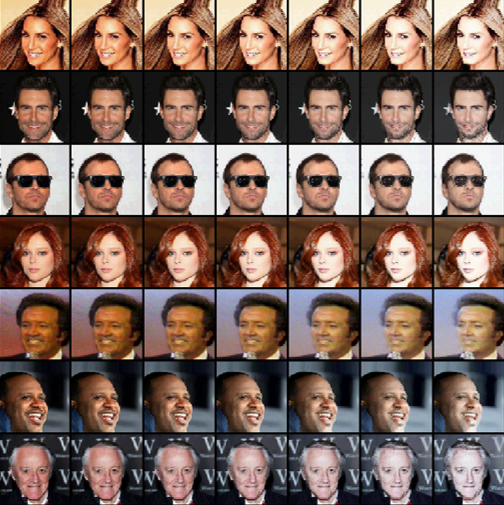

Reproduced and extended LASSI, a fairness-focused deep learning model that uses generative modelling and representation learning to ensure individual fairness.

Optimized the Particle Transformer machine learning model used to identify particle collision jets in high-energy physics experiments at CERN.

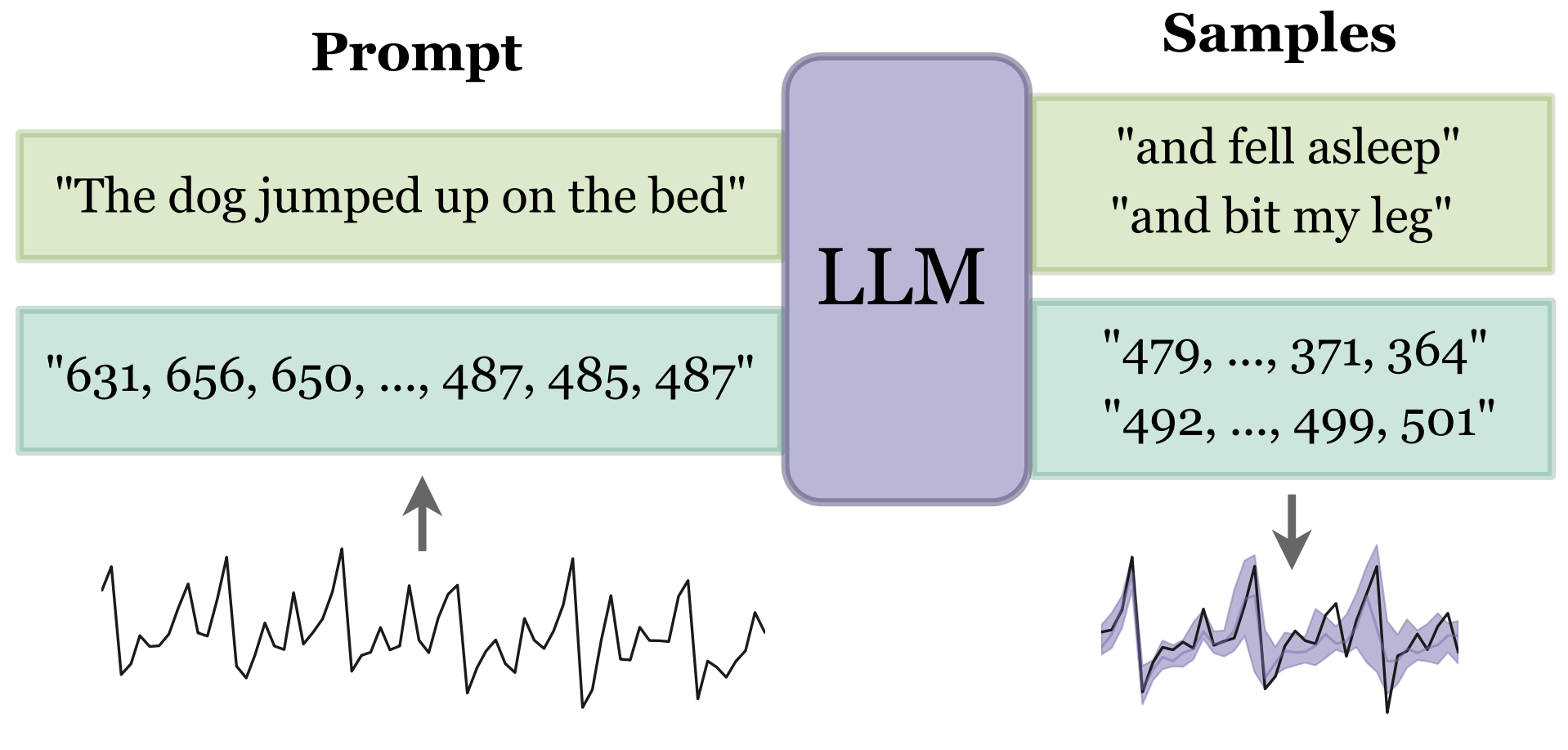

Studied the use of foundation models for forecasting transaction data, connecting advances in language modeling with time series prediction.

Fairness has become an increasingly important topic within the AI community. Using recent advances in generative modelling and deep learning, researchers at DeepMind have proposed a novel representation learning method called LASSI. This model ensures that similar individuals are treated similarly, a property known as individual fairness. In this paper we aim to verify the original claims and extend the research by performing additional experiments to validate the robustness of LASSI.